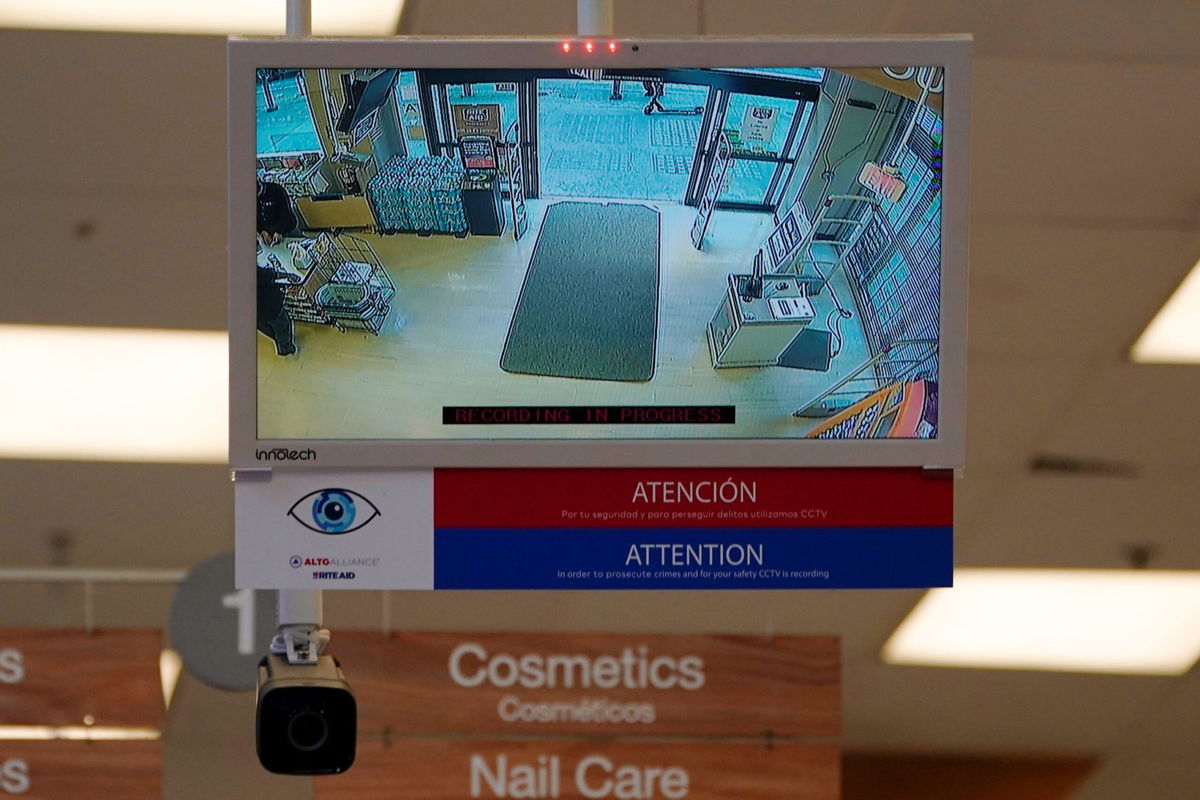

Rite Aid’s ‘reckless’ use of facial recognition got it banned from using the technology in stores for five years

A Rite Aid store in New York.

By Jordan Valinsky, CNN

New York (CNN) — Rite Aid has agreed to a five-year ban from using facial recognition technology after the Federal Trade Commission found that the chain falsely accused customers of crimes and unfairly targeted people of color.

The FTC and Rite Aid reached a settlement Tuesday after a complaint accused the chain of using artificial intelligence-based software in hundreds of stores to identify people Rite Aid “deemed likely to engage in shoplifting or other criminal behavior” and kick them out of stores – or prevent them from coming inside.

But the imperfect technology led employees to act on false-positive alerts, which wrongly identified customers as criminals. In some cases, the FTC said, Rite Aid employees publicly accused people of criminal activity in front of friends, family and strangers. Some customers were wrongly detained and subjected to searches, the FTC said.

Rite Aid said in a statement that it's "pleased to reach an agreement" with the FTC but added that "we fundamentally disagree with the facial recognition allegations in the agency's complaint." The company said the technology was part of a pilot program used in only a "limited number of stores," and that the test stopped more than three years ago, before the FTC's investigation began.

The FTC’s legal filing, which contains customer complaints spanning from 2012 to 2020, said that some customers were “erroneously accused by employees of wrongdoing” because Rite Aid’s technology “falsely flagged the consumers as matching someone who had previously been identified as a shoplifter or other troublemaker.” The facial recognition software was mostly deployed in neighborhoods with large Black, Latino and Asian communities, the FTC said.

“Rite Aid’s reckless use of facial surveillance systems left its customers facing humiliation and other harms, and its order violations put consumers’ sensitive information at risk,” said Samuel Levine, director of the FTC’s Bureau of Consumer Protection, in a release.

The proposed order means that Rite Aid will have to "implement comprehensive safeguards" to prevent harm to its customers when deploying the AI-based technology in its stores. The order also prevents Rite Aid from using the tech if it "cannot control potential risks to consumers."

“The safety of our associates and customers is paramount,” Rite Aid said. “As part of the agreement with the FTC, we will continue to enhance and formalize the practices and policies of our comprehensive information security program.”

The pilot program involved creating a database of thousands of low-quality images of customers' faces, taken from store cameras and employees' phones, which were labeled as "persons of interest" because Rite Aid believed those customers had engaged in criminal activity in its stores. The FTC is requiring Rite Aid to delete those pictures and notify customers that they're in the database.

Since Rite Aid is engaged in bankruptcy proceedings, the FTC said its orders would go into effect after approval from the courts.


™ & © 2023 Cable News Network, Inc., a Warner Bros. Discovery Company. All rights reserved.